Improving Listify

Listify’s latency, while tolerable, could do with some improvement (performance was a secondary concern while building it; I felt that the application functionality was far more interesting). So I’ve decided to address this issue and blog about it as I go along.

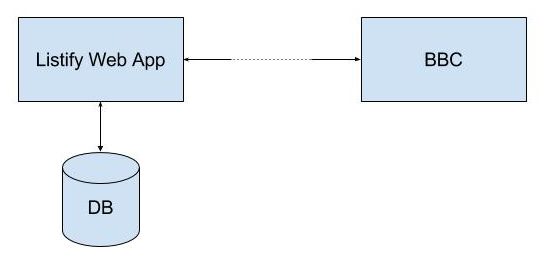

Quick recap: Listify retrieves BBC radio schedule information in order to create Spotify playlists (aside: unfortunately the BBC don’t provide a clean API to retrieve this information, so there’s a fair bit of scraping going on). In order to retrieve that information, the application needs to send requests to the BBC Radio web site.

So, for instance, to create a Spotify playlist, the application must retrieve the radio tracklist for that particular episode of that particular show. This involves making a relatively-expensive external network request to grab that information. Another similar request is then made to create the Spotify playlist. Fortunately the retrieved information is cached in Listify’s local database, so that if the playlist for this episode is requested again, the information is instead retrieved more quickly from the database. But of course, the first user after that playlist still has to take that initial latency hit! Let’s fix this….

Here are some sample numbers from a basic test, using Apache Benchmark. I’ve got a (Docker) containerised version of the web app running locally, so these numbers are more useful because they exclude the network time from a user machine to the Listify server. Here I’m hitting the show list for Radio 1, 100 times serially.

$ ab -n 100 localhost/shows/radio1

...

Percentage of the requests served within a certain time (ms)

50% 34

95% 49

100% 1249 (longest request)Now, generally the response time isn’t so bad, but as you can see the longest response time sucks – these are the times for the first visitor to that page. Sure, that cost is amortized over the subsequent requests, but it’s still an unpleasant experience for that first user!

One solution to this is to pre-warm the cache. That is, have an automated process run ahead of time doing the job of the first visitor, so that no real human visitors have to endure that latency. My, rather simple, approach is to have a cron job run nightly that uses wget to recursively crawl the site and hit each page.

wget -r --delete-after localhost/stationsThis is the basic form of the command, but there are additional options (e.g. ignore robots.txt, specifying a max recursive depth, waiting in between requests, ignore certain pages, etc.).

And now, with a warm cache, here are the new numbers:

$ ab -n 100 localhost/shows/radio1

...

Percentage of the requests served within a certain time (ms)

50% 33

95% 47

100% 91 (longest request)A big improvement!

But there are, of course, some downsides:

- The system may waste time and disk space caching content that may not be used. This is mitigated by only caching the info for the most popular stations.

- Since this cache is only warmed nightly, despite new content continuously being made available throughout the day, some newer content may result in a cache miss. I think this is tolerable because the cache misses now happen less often.

- At the moment, this cache initialisation is a pretty resource-intensive task – essentially, deliberately inducing peak time usage. This is OK for now, since the cache warming happens during a quiet period at night, so has minimal impact on other users of the system.

That’s all for now. More to come!

Bluesky

Bluesky